Client: Curiosity and Passion

Tools and frameworks: Oculus Quest, Unity, Pen & Paper, Microsoft MRTK, Microsoft Maquette, VR/AR, Visual Studio

Role: Prototyper, Researcher

--------

When I was first introduced to virtual reality in 2015, the setup was 3DOF, tethered to bulky computer systems, and lacked controllers. Today, the whole system has evolved to include a standalone mobile form factor, inside-out 6DOF tracking, controllers, and visual hand tracking, along with development tools and frameworks for creators.

As mixed reality advances, the medium demands interactions designed specifically for it. Replicating the gestures and interactions of existing devices might not be a viable solution, and many potential interactions for the medium are yet to be explored. Because mixed reality provides an immersive environment, a proposed interaction should be realistic (or at least believable) according to our mental model of the real world. This work explores possible interaction models for simple tasks in mixed reality.

Note: While these prototypes are designed in virtual reality, the hand-tracking and interaction concepts also apply to augmented reality headsets without significant changes.

--------

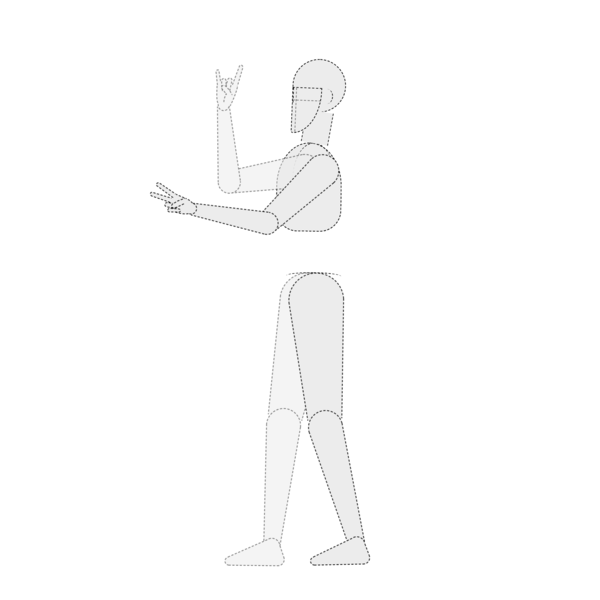

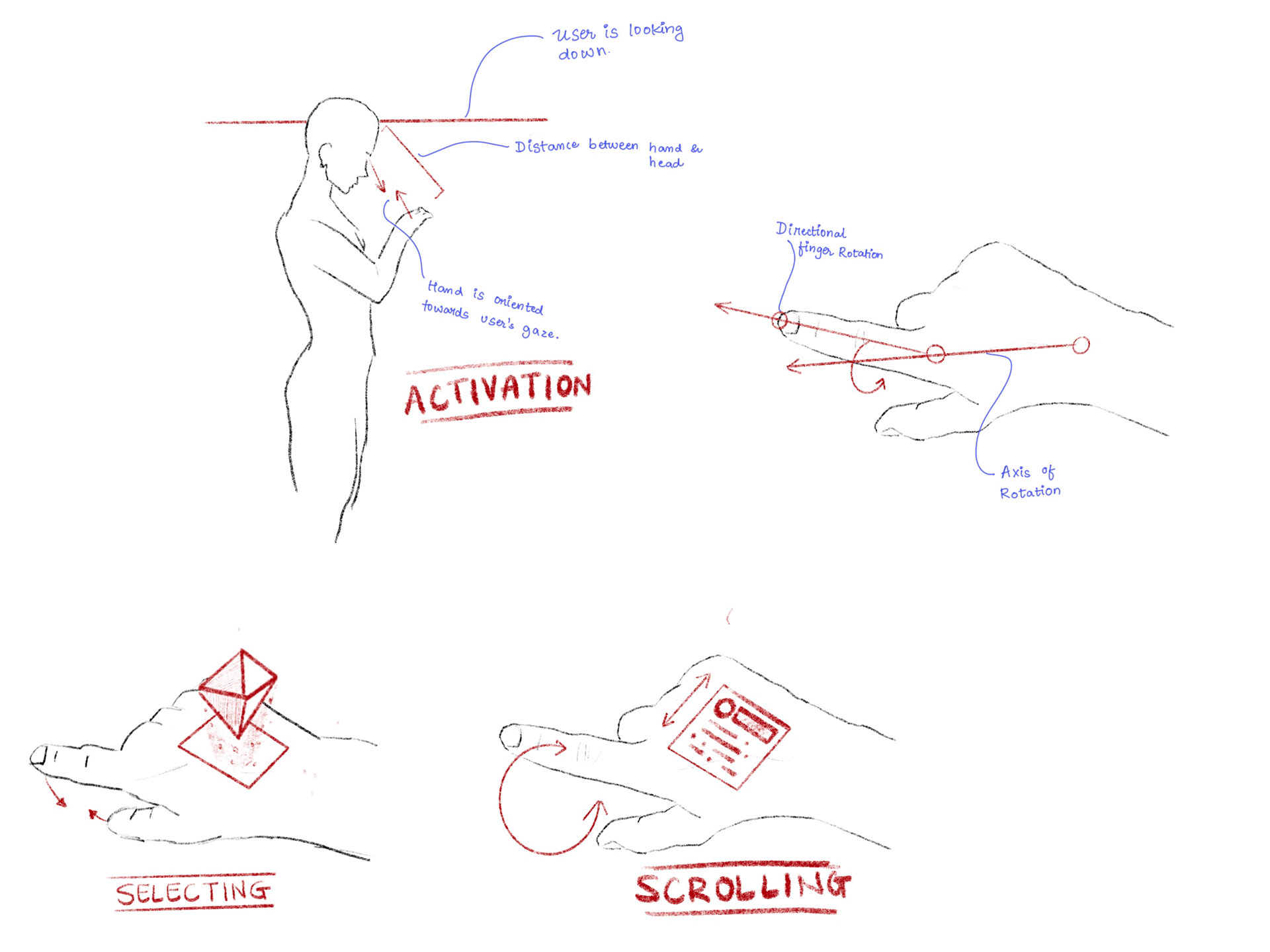

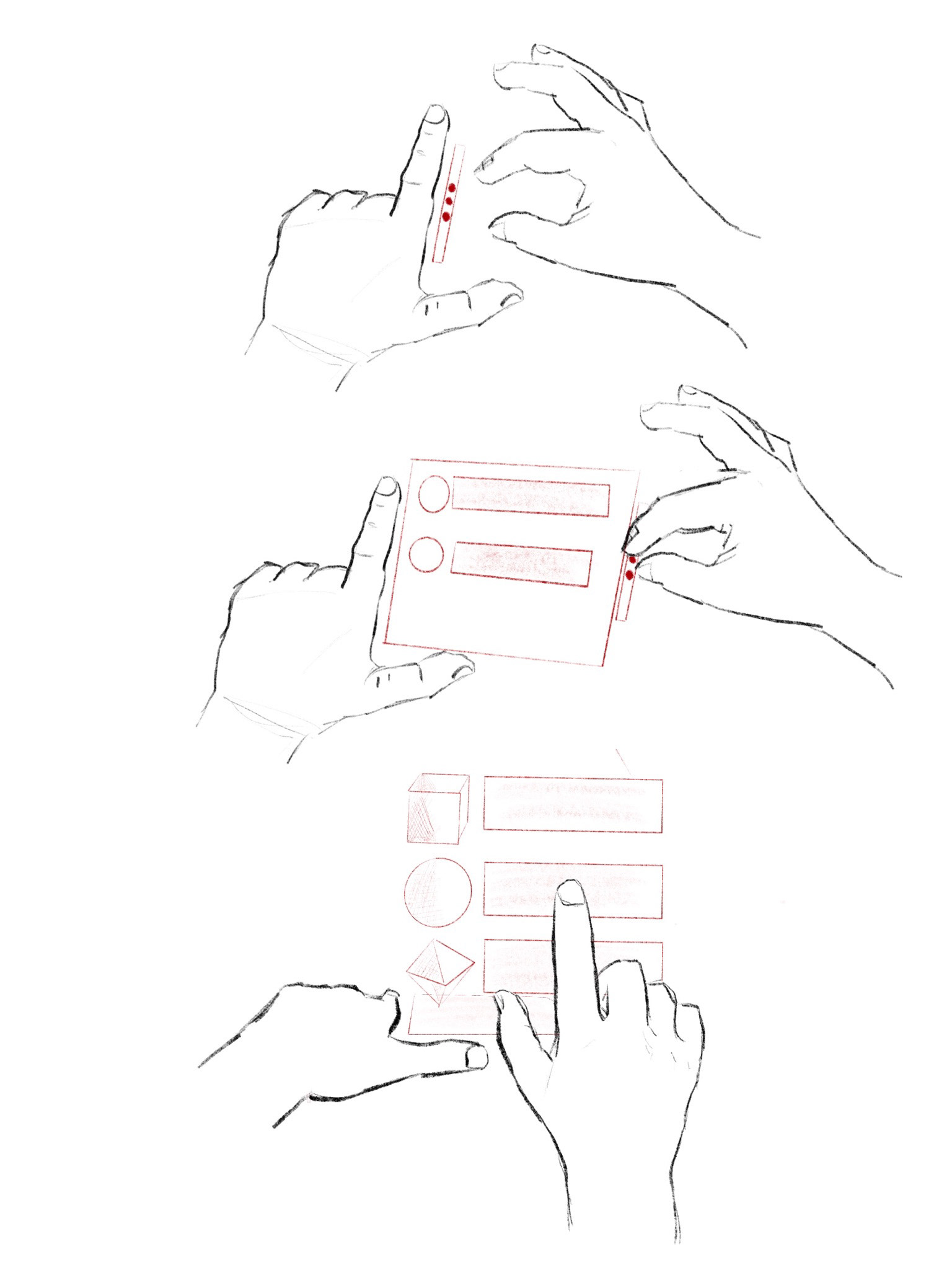

The human hand skeleton is composed of 27 bones, each articulated with at least one other bone via a synovial joint. In total, the human hand has 21 degrees of freedom of movement, including the wrist. Finger movements are not entirely independent, and each joint has its own constraints. Based on observations of various hand movements, hand interactions can be engineered from the following parameters:

- Folding/unfolding of the arm (elbow joint movement)

- Hand gestures, or curl of the fingers

- Micro-movements of the fingers

- Arm movement through the shoulder joint
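To make the curl parameter concrete, here is a minimal Python sketch. The prototypes were built in Unity, so this is only an engine-agnostic illustration, not the prototype's code. It assumes the hand tracker reports 3D joint positions for each finger, from the base of the metacarpal to the fingertip, and computes curl as the summed bend at the interior joints, normalized by a hypothetical full-curl value. The names `finger_curl` and `max_total_bend` are illustrative and not part of MRTK or the Oculus SDK.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _bend_angle(u: Vec3, v: Vec3) -> float:
    """Angle in radians between two consecutive bone vectors (0 = straight)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    if nu == 0.0 or nv == 0.0:
        return 0.0  # degenerate (zero-length) bone: treat as no bend
    # Clamp before acos to guard against floating-point drift outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, dot / (nu * nv))))


def finger_curl(joints: Sequence[Vec3],
                max_total_bend: float = math.radians(270)) -> float:
    """Normalized curl in [0, 1] for one finger.

    `joints` runs from the base of the metacarpal to the fingertip, so the
    interior points are the knuckle (MCP) and the two finger joints (PIP, DIP).
    `max_total_bend` is a hypothetical calibration value for a fully curled finger.
    """
    bones = [(b[0] - a[0], b[1] - a[1], b[2] - a[2])
             for a, b in zip(joints, joints[1:])]
    total = sum(_bend_angle(u, v) for u, v in zip(bones, bones[1:]))
    return min(1.0, total / max_total_bend)
```

Thresholding these curl values is one way to derive simple gestures, for example treating a hand as a fist when every finger's curl exceeds a calibrated value; such thresholds would need tuning per device.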

Figure panels: folding/unfolding arm, hand gestures, micro finger movements, hand movement via shoulder joint

An interaction is composed by blending different building blocks. For example, a hand gesture can trigger the start of an operation while arm movement through the shoulder provides an axis value. Similarly, a finger micro-movement mapped to an operation can be activated depending on the arm's fold and the finger's curl.
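A minimal Python sketch of such a composition, assuming a gesture recognizer that reports whether the hand is pinching and a tracker that reports hand position in meters. The `HandFrame` and `PinchAxisControl` names and the 1 cm-per-unit mapping are hypothetical. The pinch acts as the trigger block, and horizontal hand displacement (produced largely by rotation at the shoulder) acts as the axis block.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class HandFrame:
    """One tracking sample (hypothetical structure, not an SDK type)."""
    is_pinching: bool   # output of the gesture-recognition block
    hand_pos: Vec3      # hand position in meters, world space


class PinchAxisControl:
    """Pinch starts an operation; horizontal hand travel sets an axis value."""

    def __init__(self, meters_per_unit: float = 0.01):
        self.meters_per_unit = meters_per_unit   # hypothetical mapping: 1 cm per unit
        self.origin: Optional[Vec3] = None       # hand position when the pinch began
        self.value = 0.0
        self._start_value = 0.0

    def update(self, frame: HandFrame) -> float:
        if frame.is_pinching and self.origin is None:
            # Trigger block: the pinch begins, so the operation starts here.
            self.origin = frame.hand_pos
            self._start_value = self.value
        elif frame.is_pinching:
            # Axis block: displacement along x (mostly from the shoulder) drives the value.
            dx = frame.hand_pos[0] - self.origin[0]
            self.value = self._start_value + dx / self.meters_per_unit
        else:
            # Releasing the pinch ends the operation; the value stays where it was.
            self.origin = None
        return self.value
```

Keeping the value relative to where the pinch began lets the user release, reposition the arm, and pinch again to continue, much like lifting and repositioning a mouse.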

Below are some examples of building blocks.

Figure panels: folding/unfolding of arm, positional movement of hand, micro-movement of finger, hand movement via shoulder joint, gesture recognition, curl of fingers, rotational wrist movement

-------- -------- --------

Throw-To-Delete

With cmd+delete on computers and swipe-away on phones, what should the gesture be for deleting or closing an entity in mixed reality?

--------

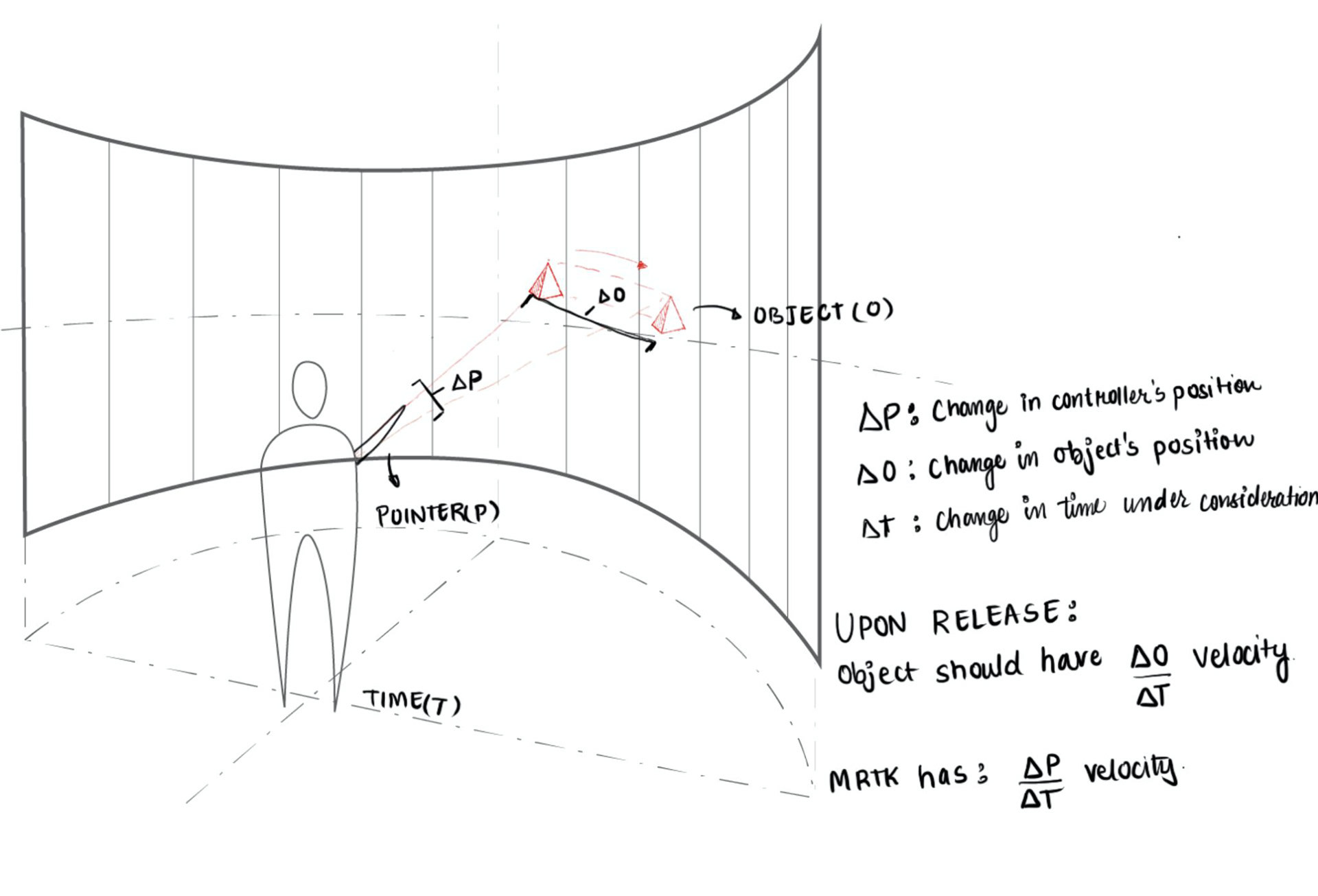

For the throw-to-delete interaction, I prototyped a behavior for selecting a faraway object, bringing it within arm's reach for any operation, and then either returning it or deleting it with a throwing gesture. Use cases include summoning an entity (an email, an immersive project report, or just a widget) from a list, inspecting or operating on it, and then dismissing it either by deleting it or by sending it back to its original location. For example, archiving an email directly from the list, or closing a widget that is no longer needed.
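One way to separate a throw from a simple release is to look at hand speed at the moment the object is let go. The Python sketch below assumes timestamped hand positions in meters while the object is held; the `GrabReleaseClassifier` name, the 1.5 m/s threshold, and the five-sample window are hypothetical values, not figures from the prototype.

```python
import math
from collections import deque
from typing import Deque, Tuple

Vec3 = Tuple[float, float, float]


class GrabReleaseClassifier:
    """At release, decides between a throw (delete) and a plain release (return)."""

    def __init__(self, throw_speed: float = 1.5, window: int = 5):
        self.throw_speed = throw_speed  # m/s, hypothetical threshold
        # Only the most recent `window` samples are kept while the object is held.
        self.samples: Deque[Tuple[float, Vec3]] = deque(maxlen=window)

    def track(self, t: float, hand_pos: Vec3) -> None:
        """Record a timestamped hand position (seconds, meters)."""
        self.samples.append((t, hand_pos))

    def release_speed(self) -> float:
        """Net displacement across the window divided by the elapsed time."""
        if len(self.samples) < 2:
            return 0.0
        (t0, p0), (t1, p1) = self.samples[0], self.samples[-1]
        if t1 <= t0:
            return 0.0
        return math.dist(p0, p1) / (t1 - t0)

    def on_release(self) -> str:
        action = "delete" if self.release_speed() >= self.throw_speed else "return"
        self.samples.clear()  # start fresh for the next grab
        return action
```

Estimating speed over a few samples rather than from the last frame alone makes the decision less sensitive to tracking jitter.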

-------- -------- --------

Gestured-Hand-Swipe-For-Undo-Redo

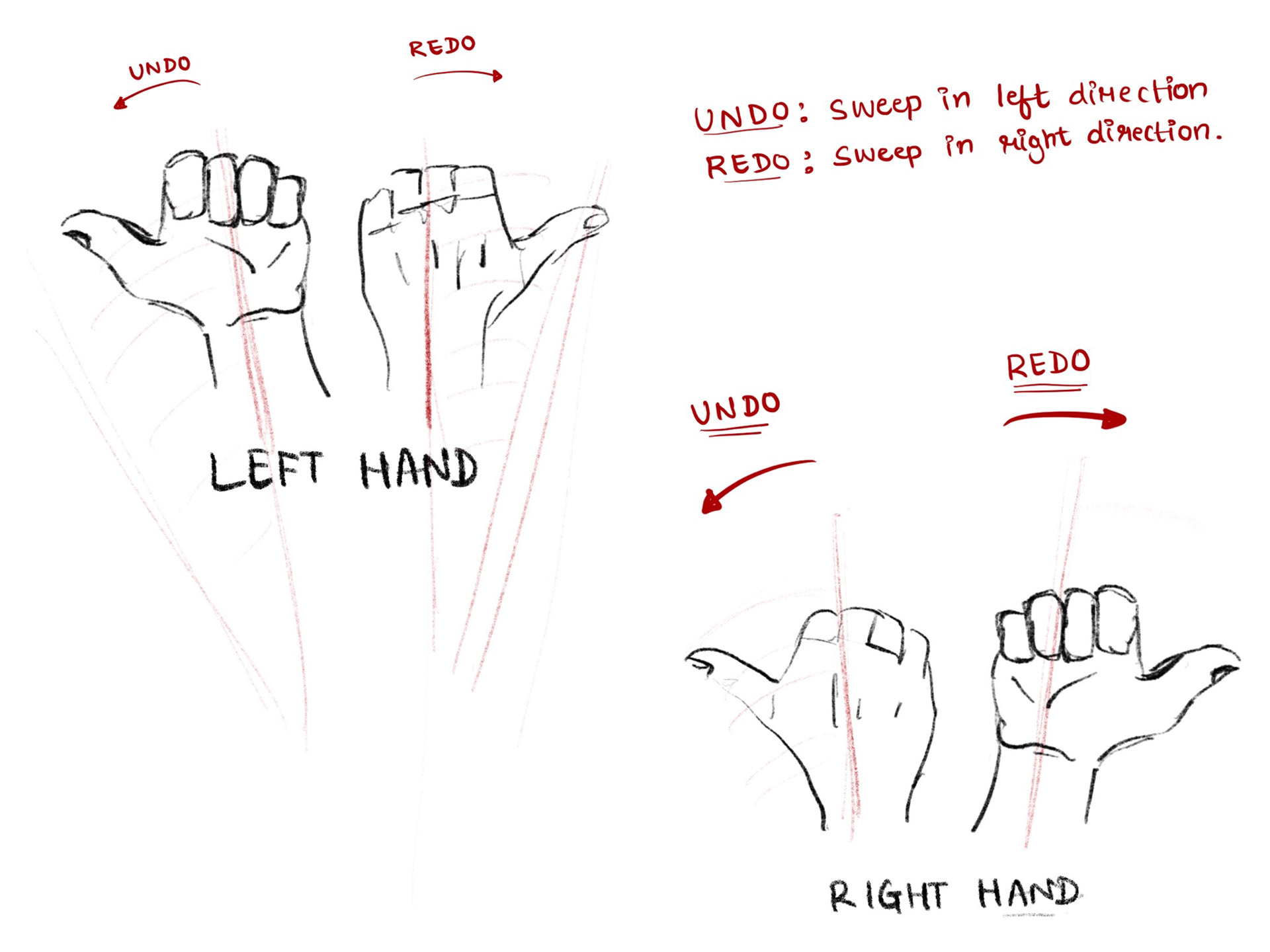

Undo/redo is executed through different kinds of interactions: ctrl+z on a computer, “Shake to Undo” on mobile phones, or a multi-finger swipe on a multi-touch display. What could the interaction be in mixed reality with hand tracking?

--------

People move their hands while communicating; gestures are a fundamental part of human communication. In this prototype, I explore a thumb-out hand swipe, to the left or to the right, for undo/redo. To test it, I implemented a pinch brush for drawing; a thumb-out hand swipe then undoes or redoes a stroke. The gesture has potential in the many applications that need a way to revert changes.
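A Python sketch of the undo/redo logic, assuming a separate recognizer reports whether the thumb is extended and the hand's horizontal position at the start and end of a movement. Mapping a left swipe to undo and a right swipe to redo, along with the distance and duration thresholds, are assumptions for illustration; `StrokeHistory`, `classify_swipe`, and `handle_swipe` are hypothetical names.

```python
from typing import List, Optional


class StrokeHistory:
    """Undo/redo stacks for pinch-brush strokes (strokes are plain labels here)."""

    def __init__(self) -> None:
        self.strokes: List[str] = []
        self.redo_stack: List[str] = []

    def add(self, stroke: str) -> None:
        self.strokes.append(stroke)
        self.redo_stack.clear()  # drawing a new stroke discards the redo history

    def undo(self) -> None:
        if self.strokes:
            self.redo_stack.append(self.strokes.pop())

    def redo(self) -> None:
        if self.redo_stack:
            self.strokes.append(self.redo_stack.pop())


def classify_swipe(thumb_out: bool, start_x: float, end_x: float,
                   duration_s: float, min_distance: float = 0.15,
                   max_duration: float = 0.5) -> Optional[str]:
    """Return "undo", "redo", or None for one horizontal hand movement.

    Assumptions: x (meters) increases to the user's right, a left swipe means
    undo, a right swipe means redo, and the thresholds are placeholders.
    """
    if not thumb_out or duration_s > max_duration:
        return None  # wrong hand pose, or too slow to count as a swipe
    dx = end_x - start_x
    if abs(dx) < min_distance:
        return None  # too short: probably incidental movement
    return "redo" if dx > 0 else "undo"


def handle_swipe(history: StrokeHistory, action: Optional[str]) -> None:
    if action == "undo":
        history.undo()
    elif action == "redo":
        history.redo()
```

Requiring the thumb-out pose before classifying motion keeps ordinary hand movement while drawing from triggering an undo.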

-------- -------- --------

Pinch-To-Rotate Dial

Sliders are good. They are ubiquitous on digital screens because of their natural affordance on a flat screen. But the dial has its own place, and 6DOF hand tracking in mixed reality enables natural interaction models for it.

--------

For the pinch-to-rotate interaction, I explored ways to change a value using a circular dial interface. Compared to sliders, a dial has benefits such as its compact size and support for both definite and indefinite data ranges. This prototype implements a hand interaction for tuning a dial with a pinch-and-rotate hand movement. Use cases include operations that involve tuning values or infinite scrolling, for example changing the volume or scrolling through data such as an extensive photo library or a playlist, as in an in-car infotainment system.

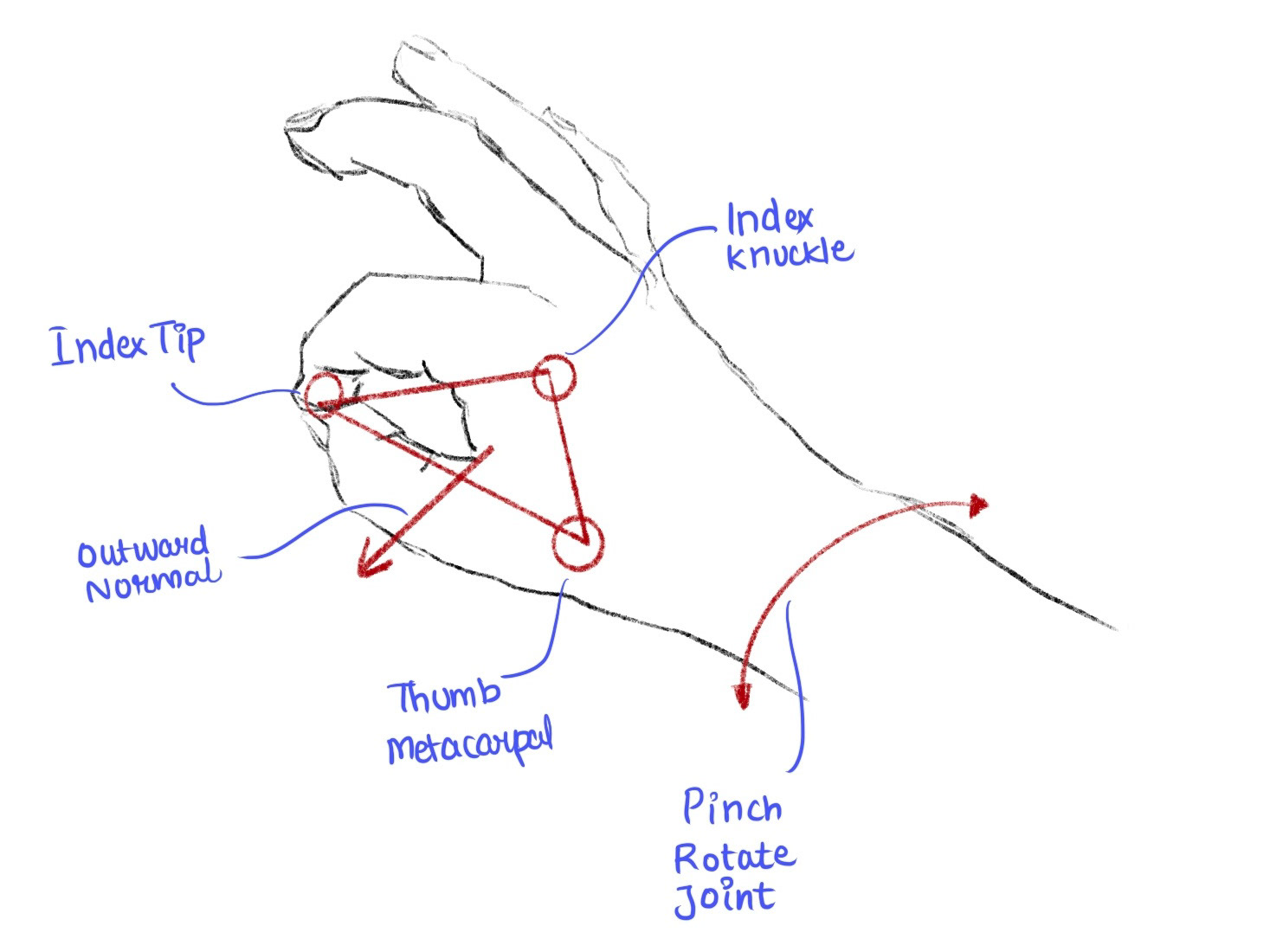

Figure panels: index fingertip rotation, three tracked points for stability
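The pinch-and-rotate behavior can be sketched as an angle accumulator: while the pinch is held, the fingertip's angle around the dial center is tracked with `math.atan2`, and each frame's change in angle is added to the value. This minimal Python version assumes fingertip positions already projected onto the dial's plane and tracks a single point, so it does not reproduce the prototype's three-point stabilization. `PinchDial` and `units_per_turn` are hypothetical names.

```python
import math
from typing import Optional, Tuple

Vec2 = Tuple[float, float]


class PinchDial:
    """Accumulates rotation of a pinched fingertip around the dial center."""

    def __init__(self, units_per_turn: float = 100.0):
        self.units_per_turn = units_per_turn  # hypothetical: value change per full turn
        self.value = 0.0
        self._last_angle: Optional[float] = None

    def update(self, pinching: bool, tip: Vec2, center: Vec2 = (0.0, 0.0)) -> float:
        if not pinching:
            self._last_angle = None  # releasing the pinch stops tracking, no jump on re-grab
            return self.value
        angle = math.atan2(tip[1] - center[1], tip[0] - center[0])
        if self._last_angle is not None:
            delta = angle - self._last_angle
            # Unwrap across the -pi/+pi seam so crossing it is not read as a full turn.
            if delta > math.pi:
                delta -= 2 * math.pi
            elif delta < -math.pi:
                delta += 2 * math.pi
            # Counter-clockwise (positive) rotation increases the value.
            self.value += delta / (2 * math.pi) * self.units_per_turn
        self._last_angle = angle
        return self.value
```

Because the value is unbounded, the same accumulator suits infinite scrolling; clamping it to a range gives a bounded control such as volume.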

-------- -------- --------

Raise-To-View Notification

What interactions could provide quick and easy access to notifications? By informing users about new content or reminding them of pending tasks, notifications have become an important digital service in our lives.

--------

Before digital devices, we raised our wrist to check the time; with mobile devices, we raise our wrist or hand to check notifications. For phones, the familiar gesture is raising the hand with the palm facing the user, while for smartwatches it is raising the wrist with the back (dorsal side) of the hand facing the user. Building on the same mental model, this prototype explores raising the wrist to access the notification center in mixed reality. Index finger rotation scrolls through the notifications, similar to turning a watch crown or swiping with a thumb on a phone. Returning the hand to its usual pose closes the notification center.
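Detecting the raise can be sketched in Python as checking whether the back of the hand faces the user's head. This assumes the tracker provides the wrist position, a normal pointing out of the back of the hand, and the head position; `WristRaiseDetector` and its thresholds are hypothetical. Two thresholds (hysteresis) keep the panel from flickering when the hand hovers near the boundary.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _normalize(v: Vec3) -> Vec3:
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)  # assumes a non-zero vector
    return (v[0] / n, v[1] / n, v[2] / n)


class WristRaiseDetector:
    """Opens the notification center when the back of the hand faces the head."""

    def __init__(self, open_at: float = 0.8, close_at: float = 0.5):
        # Hypothetical thresholds on the cosine between the two directions.
        self.open_at = open_at
        self.close_at = close_at
        self.is_open = False

    def update(self, wrist: Vec3, back_of_hand_normal: Vec3, head: Vec3) -> bool:
        to_head = _normalize((head[0] - wrist[0], head[1] - wrist[1], head[2] - wrist[2]))
        normal = _normalize(back_of_hand_normal)
        alignment = sum(a * b for a, b in zip(normal, to_head))
        if not self.is_open and alignment >= self.open_at:
            self.is_open = True   # wrist raised: show the notification center
        elif self.is_open and alignment <= self.close_at:
            self.is_open = False  # hand back to its usual pose: close it
        return self.is_open
```

Between the two thresholds the detector keeps its previous state, so a slightly tilted wrist neither opens nor closes the panel.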

Notifications are a complex system that serves many purposes in digital services. This prototype is just the tip of the iceberg, exploring aspects such as activation, placement, and scrolling. A good design would require a harmonious interplay of various interactions and visual designs.

Figure panels: raise to activate notification center, L-gesture pull-out display (initial sketch)

-------- -------- --------

These prototypes are part of weekly interaction design sprints, and I'm committed to updating this page with future prototypes.

I will also publish a detailed breakdown of each prototype on my Medium blog.