Client: AT&T

Tools and frameworks: Android ARCore, Unity, TensorFlow

Role: Machine Learning Engineer, Unity developer

Team size: 3

--------

Splash screen of the application

Location selection page

AR Voyager allows users to create immersive videos of themselves moving around inside high-resolution 3D environments—literally “teleporting” into a range of real and fictional locations.

The resulting content demonstrates the astonishing creative potential unleashed by the combination of 5G networks and Augmented Reality.

The demo streams 3D environments into the user's space over 5G. Users can explore the environment with six degrees of freedom (6-DOF), and they can also flip to the front camera and record a selfie video inside the same environment. This requires real-time rendering, real-time human segmentation, and video recording and processing. I was tasked with human segmentation for the Augmented Reality experience within the Unity game engine.

Testing the DeepLab neural network in Python

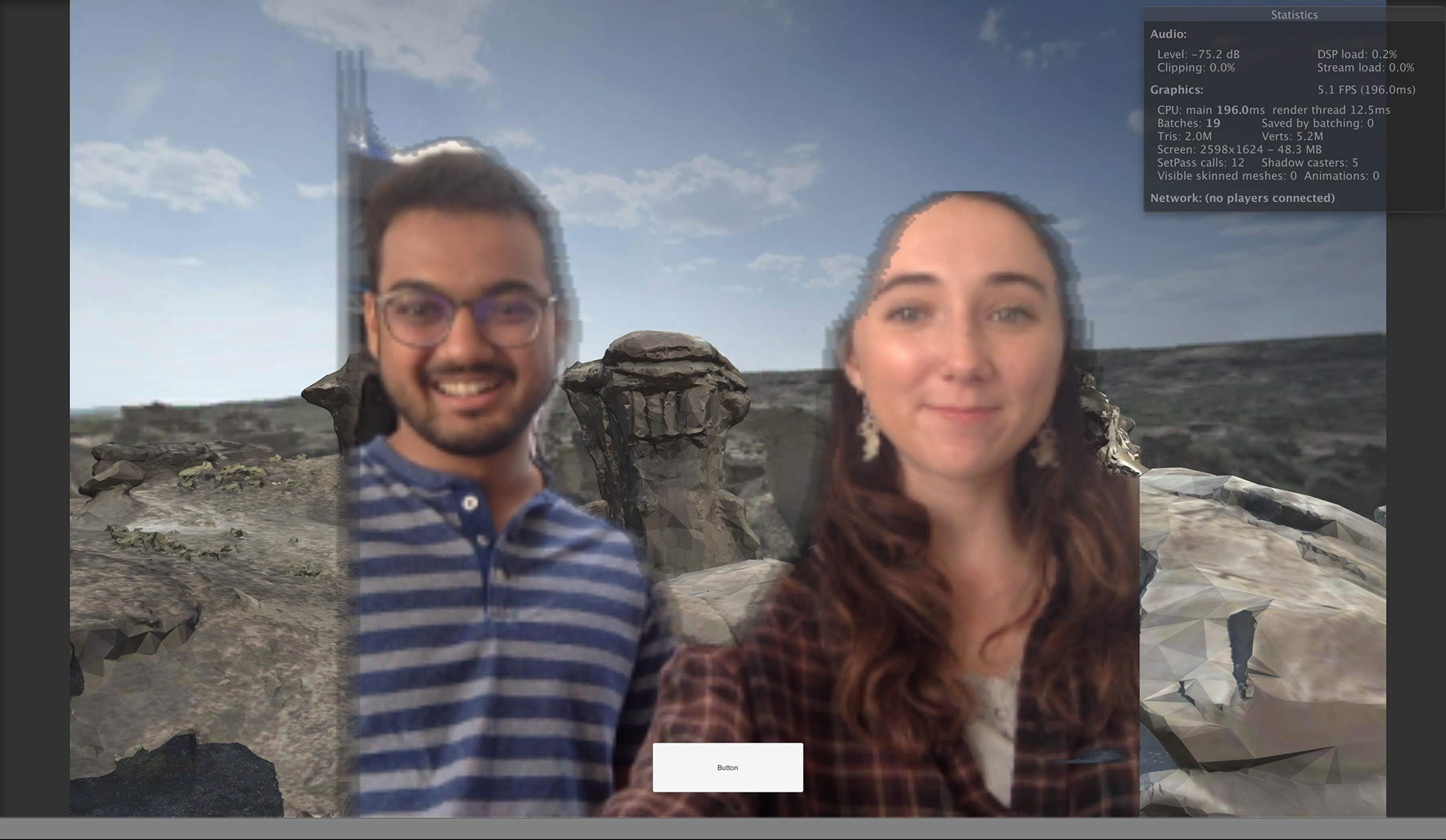

Integrating the DeepLab model into the Unity game engine

Research into human segmentation started with several candidate approaches:

- Background subtraction using OpenCV

- Facial feature-point tracking and face estimation with OpenCV to generate a rough segment

- Segmentation using the camera's depth map (however, the target devices lacked a front-facing depth camera)

- A neural network to generate a segmentation map
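The first idea, background subtraction, can be illustrated with a minimal pure-Python sketch. It is a simplified stand-in (per-pixel differencing against a fixed reference frame), not OpenCV's own background-subtraction API, and the function name and threshold are hypothetical. It also hints at why the approach is fragile in AR: once the phone moves, the stored reference background no longer lines up with the live frame.

```python
from typing import List

# Grayscale frame: rows of 0-255 intensities.
Frame = List[List[int]]


def subtract_background(frame: Frame, background: Frame, threshold: int = 30) -> List[List[int]]:
    """Return a binary mask (1 = foreground) by differencing against a reference frame.

    Hypothetical helper: a pixel counts as foreground when its absolute
    intensity difference from the reference background exceeds `threshold`.
    """
    if len(frame) != len(background) or any(
        len(row) != len(bg_row) for row, bg_row in zip(frame, background)
    ):
        raise ValueError("frame and background must have the same shape")
    return [
        [1 if abs(p - q) > threshold else 0 for p, q in zip(row, bg_row)]
        for row, bg_row in zip(frame, background)
    ]


if __name__ == "__main__":
    background = [[10, 10, 10], [10, 10, 10]]
    frame = [[10, 200, 10], [12, 180, 60]]
    for mask_row in subtract_background(frame, background):
        print(mask_row)
```

The threshold trades noise tolerance against sensitivity; small sensor noise (the 12 vs 10 pixel) stays background, while large changes are flagged as foreground.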

My background in image processing helped me learn about and research neural-network frameworks for semantic segmentation. With the help of Sara Hanson, I integrated DeepLab, a TensorFlow model, into the Unity game engine to generate a segmentation map.
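Once a DeepLab-style model produces a per-pixel class map, turning it into a selfie composite reduces to selecting one class. The sketch below assumes the model uses the PASCAL VOC label set, where class index 15 is `person`; the function names are hypothetical, and plain Python lists stand in for image tensors so the example stays self-contained.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # RGB
Image = List[List[Pixel]]
LabelMap = List[List[int]]

# Assumption: PASCAL VOC label set, in which index 15 is "person".
PERSON_CLASS = 15


def person_mask(labels: LabelMap, person_class: int = PERSON_CLASS) -> List[List[bool]]:
    """Binary mask that is True wherever the model predicted the person class."""
    return [[label == person_class for label in row] for row in labels]


def composite(camera: Image, environment: Image, mask: List[List[bool]]) -> Image:
    """Keep camera pixels where the mask is True; show the 3D environment elsewhere."""
    return [
        [cam if keep else env for cam, env, keep in zip(cam_row, env_row, mask_row)]
        for cam_row, env_row, mask_row in zip(camera, environment, mask)
    ]


if __name__ == "__main__":
    labels = [[0, 15], [15, 0]]
    camera = [[(255, 0, 0)] * 2 for _ in range(2)]        # red "selfie" pixels
    environment = [[(0, 0, 255)] * 2 for _ in range(2)]   # blue "environment" pixels
    print(composite(camera, environment, person_mask(labels)))
```

In a real-time Unity pipeline this per-pixel selection would more likely run on the GPU with the mask supplied as a texture; the Python version only shows the logic.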

Testing per-frame computation time and segmentation of two people

The next step was to generate the segmentation map in real time, which required a lighter neural-network model and integrating the experimental TensorFlow Lite (TFLite) runtime into the Unity game engine. Thanks go to the Unity engineers who helped integrate TFLite into the project. Sara and I then took on the task of optimizing the pipeline from camera capture to segmented image to reach 30 fps.
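Reaching 30 fps leaves roughly 33.3 ms per frame (1000 ms / 30) for the whole capture-to-segmented-image path, so every stage has to be measured against that shared budget. The sketch below is a hypothetical timing harness: the stage names and stand-in functions are placeholders, and `time.perf_counter` is Python's standard high-resolution clock, not part of the original Unity project.

```python
import time
from typing import Callable, Dict, List, Tuple

TARGET_FPS = 30
FRAME_BUDGET_MS = 1000.0 / TARGET_FPS  # about 33.3 ms per frame at 30 fps

Stage = Tuple[str, Callable[[object], object]]


def run_frame(stages: List[Stage], frame: object) -> Tuple[object, Dict[str, float]]:
    """Run each pipeline stage in order, recording its wall-clock time in ms."""
    timings: Dict[str, float] = {}
    data = frame
    for name, stage in stages:
        start = time.perf_counter()
        data = stage(data)
        timings[name] = (time.perf_counter() - start) * 1000.0
    return data, timings


def within_budget(timings: Dict[str, float], budget_ms: float = FRAME_BUDGET_MS) -> bool:
    """True if the summed stage times fit inside one frame's budget."""
    return sum(timings.values()) <= budget_ms


if __name__ == "__main__":
    # Hypothetical stand-in stages; a real pipeline would grab a camera
    # frame, run the TFLite model, and post-process the mask.
    stages: List[Stage] = [
        ("capture", lambda f: f),
        ("inference", lambda f: [[0 for _ in row] for row in f]),
        ("mask", lambda labels: [[v == 15 for v in row] for row in labels]),
    ]
    result, timings = run_frame(stages, [[1, 2], [3, 4]])
    for name, ms in timings.items():
        print(f"{name}: {ms:.3f} ms")
    print("within budget:", within_budget(timings))
```

Summing per-stage timings, rather than timing inference alone, matters because capture and any post-processing share the same per-frame window as the model itself.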

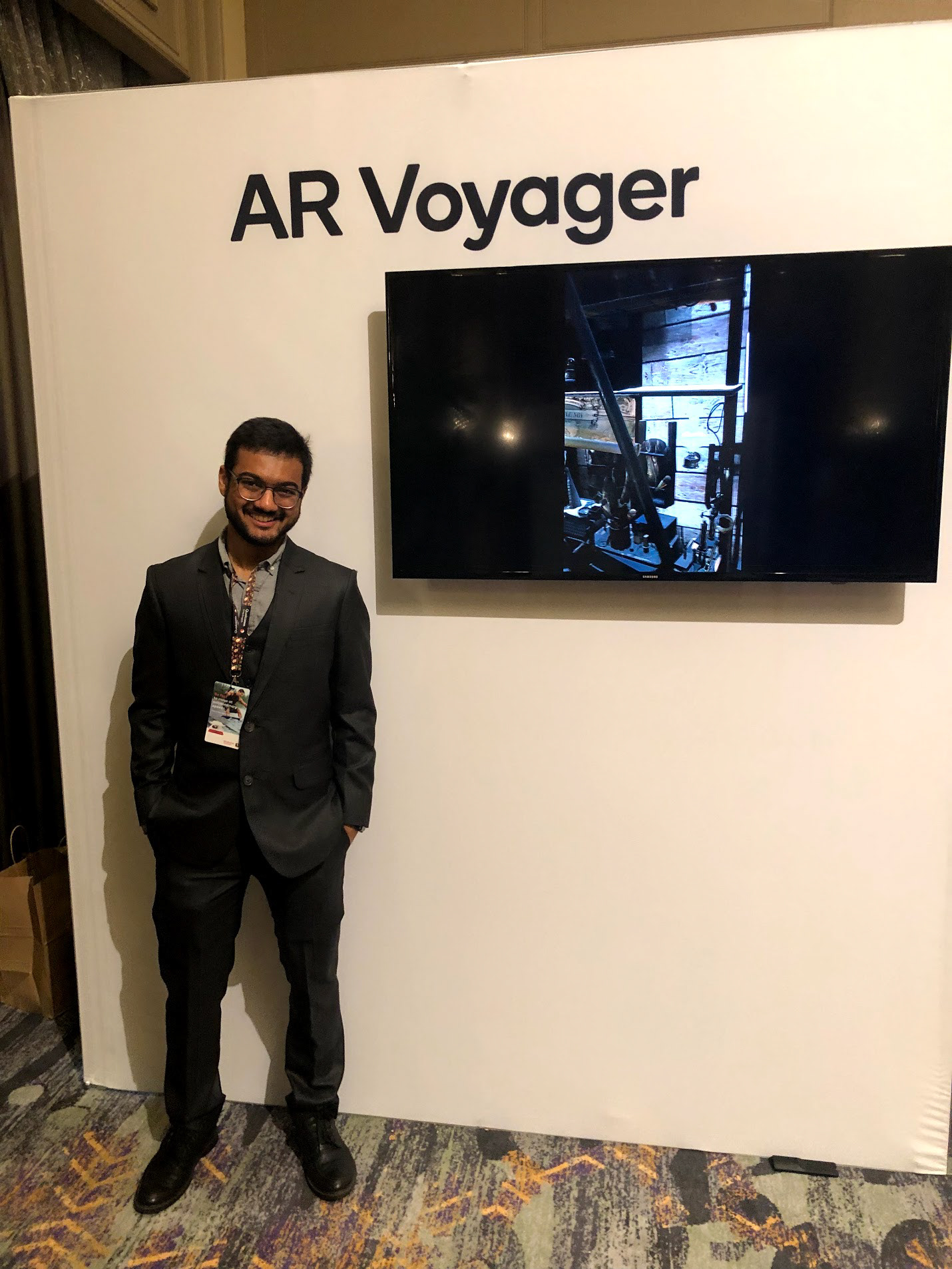

The project was demoed at Snapdragon Summit 2018 in Maui and was well received by attendees.

In the press: 5G changes how we work, play, and collaborate

Team relaxing after the summit

Chaitanya and Sara testing the performance of segmentation algorithm in Unity

AR Voyager at Snapdragon Summit